Written by: Piotr Strzałkowski (Expert, Embedded Domain)

I have encountered misconceptions about software quality far too many times, both in general and particularly in the field of embedded systems. In this article, I would like to address some of the myths surrounding this subject and make the notion clearer for everyone interested in (embedded) software development and testing. So, what is software quality?

The definitions may vary wildly depending on whom you ask. Specialists tend to adopt the ones that fit their particular areas of expertise. For example, a developer might say it means clean code with a cohesive naming convention, consistent formatting throughout the project, and, ideally, no coding errors. A UI designer, on the other hand, will probably focus on a clean, efficient frontend characterized by high accessibility and modern visual themes. So which approach is the right one?

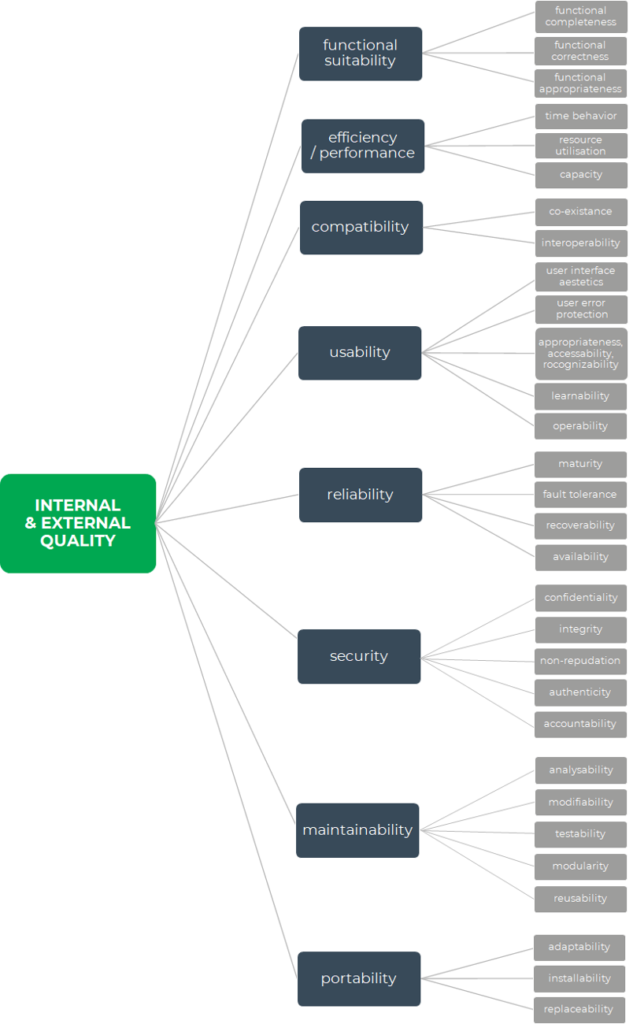

The actual definition can be found in the most recent version of the system and program engineering ISO 25010 standard, which gives the following image:

As we can see, software quality has been described as a set of characteristics and sub-characteristics required for a piece of software to be considered of high quality.

It is important to remember that blindly pursuing the highest values for every characteristic may lead to dire consequences; as always, a reasonable balance is the best choice. In embedded systems, for example, not all characteristics can be met when the system does not run on any operating system or does not co-operate with other systems.

The following is a list of all terms provided by the standard, with a short description of each. Note that the degree to which each characteristic is met is always judged against the specified requirements.

To assess the quality of given software, the project needs to include proper numerical metrics, in this case the ones described by the standard. However, almost any parameter of a project can be regarded as a metric and used to monitor development. Does that mean we should use all of them to get the highest level of analytics? Of course not: here, more does not mean better. It is crucial to keep a rational balance in both the number and the type of introduced metrics, for example by using the SMART method of metric selection, which states that a good metric is:

- Specific,
- Measurable,
- Achievable,
- Relevant,
- Time-bound.

Once we know what metrics are, it should be enough to pick some of them. Easier said than done. There are many types of metrics describing various aspects of software systems, so choosing the right set for a project is not an easy task. We can measure code test coverage, count the lines of code (CLOC), code errors per module, function resolutions, function executions, and functions triggered within a function; we can even measure the ratio of comments per file.
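
To make a per-file metric like this concrete, here is a minimal sketch in C of a line counter that classifies each line as code, comment, or blank and derives a comment density from the totals. It is a deliberate simplification, not a replacement for a dedicated tool such as cloc: it ignores string and character literals (so a `/*` inside a string literal would be misread as the start of a comment), and the names `line_stats_t`, `count_lines`, and `comment_density` are hypothetical. A line holding both code and a comment counts as code, and a whitespace-only line counts as blank even inside a block comment.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Hypothetical per-file counters used for illustration only. */
typedef struct {
    unsigned code_lines;    /* lines with at least one code character */
    unsigned comment_lines; /* lines with comment text but no code */
    unsigned blank_lines;   /* lines with whitespace only */
} line_stats_t;

/* Assign a finished line to exactly one category; code wins over comment. */
static void finish_line(line_stats_t *s, int has_code, int has_comment)
{
    if (has_code) {
        s->code_lines++;
    } else if (has_comment) {
        s->comment_lines++;
    } else {
        s->blank_lines++;
    }
}

/* Simplified counter: understands line comments and block comments,
 * but does not skip string or character literals. */
line_stats_t count_lines(const char *src)
{
    line_stats_t stats = {0u, 0u, 0u};
    int in_block = 0;    /* inside a block comment, possibly spanning lines */
    int has_code = 0;    /* current line contains code */
    int has_comment = 0; /* current line contains comment text */
    int line_open = 0;   /* current line has at least one character */

    assert(src != NULL);

    for (const char *p = src; ; ++p) {
        const char c = *p;

        if (c == '\0') {
            if (line_open) { /* last line without a trailing newline */
                finish_line(&stats, has_code, has_comment);
            }
            break;
        }
        if (c == '\n') {
            finish_line(&stats, has_code, has_comment);
            has_code = has_comment = line_open = 0;
            continue;
        }
        line_open = 1;

        if (in_block) {
            if (c == '*' && p[1] == '/') { /* end of block comment */
                in_block = 0;
                has_comment = 1;
                ++p;
            } else if (!isspace((unsigned char)c)) {
                has_comment = 1;
            }
        } else if (c == '/' && p[1] == '/') { /* line comment: skip rest */
            has_comment = 1;
            while (p[1] != '\n' && p[1] != '\0') {
                ++p;
            }
        } else if (c == '/' && p[1] == '*') { /* start of block comment */
            in_block = 1;
            has_comment = 1;
            ++p;
        } else if (!isspace((unsigned char)c)) {
            has_code = 1;
        }
    }
    return stats;
}

/* Share of non-blank lines that are comments; 0.0 for an empty input. */
double comment_density(line_stats_t s)
{
    const unsigned total = s.code_lines + s.comment_lines;
    return (total == 0u) ? 0.0 : (double)s.comment_lines / (double)total;
}
```

Feeding the contents of each source file into `count_lines` and tracking `comment_density` per module over time gives a simple documentation trend; whether a higher value is actually better remains, as with every metric, a judgment call.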

The right approach requires balancing how extensive you want your monitoring to be against how much effort you are ready to put into it. What is more, in some cases the target values for a metric need to take into account the complexity of the solution required to achieve the given level of quality.

| Quality characteristic | Example metric |
|---|---|
| Reliability | the number and severity of code errors |
| Maintainability | |
| Portability | |
| Reusability | |

It is also worth mentioning that a single metric may, to some extent, contribute to multiple quality characteristics.

Code quality is arguably one of the most important areas defining software quality, so it should be one of our first considerations when going from theory to practice. Some metrics here are free and easy to use: just enable the right compiler options and set up a process for monitoring them repeatedly. Other metrics require additional software, such as Cppcheck for static code analysis, along with the right configuration to make the monitoring possible. Both types are well worth using, especially when they provide more information without additional effort: with static code analysis at our disposal, we can monitor the quality of the code and, as an additional benefit, check its compliance with a coding standard such as MISRA.
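
As an illustration of what the compiler alone can catch, consider a classic defect: comparing a signed loop index with an unsigned `size_t` length. GCC reports it under `-Wsign-compare`, which `-Wextra` enables for C. The sketch below, built around a hypothetical `sum_samples` function, shows the fixed version: the index has the same unsigned type as the length, and fixed-width types from `<stdint.h>` are used, in the spirit of MISRA C's preference for types that state their size and signedness. Building with something like `gcc -std=c99 -Wall -Wextra -Werror` turns such warnings into build failures, so the warning count stays at zero by construction.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Before (flagged by -Wsign-compare, enabled by -Wextra in C):
 *
 *     int i;
 *     for (i = 0; i < len; i++) { ... }   signed int vs. unsigned size_t
 *
 * After: the index uses the same unsigned type as the length, and the
 * accumulator is a fixed-width type wide enough for 16-bit samples. */
uint32_t sum_samples(const uint16_t *samples, size_t len)
{
    uint32_t sum = 0u;

    /* A NULL buffer is only acceptable when there is nothing to read. */
    assert((samples != NULL) || (len == 0u));

    for (size_t i = 0u; i < len; i++) {
        sum += (uint32_t)samples[i];
    }
    return sum;
}
```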

The next step would be introducing unit tests and a process for both functional and non-functional software testing, preferably divided into the proper test levels. Of course, this stage depends heavily on the size and quality requirements of the project, and everything beyond it even more so.
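
To show what the lowest test level can look like on a target without an operating system, here is a minimal sketch of unit tests written with nothing but the standard `assert` macro. The unit under test, `sat_add_u8`, and all test names are hypothetical. Each test checks a single behaviour: a nominal case, the exact saturation boundary, and overflow.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical unit under test: 8-bit addition that saturates at 255
 * instead of wrapping around. */
uint8_t sat_add_u8(uint8_t a, uint8_t b)
{
    const uint16_t sum = (uint16_t)((uint16_t)a + (uint16_t)b);
    return (sum > UINT8_MAX) ? (uint8_t)UINT8_MAX : (uint8_t)sum;
}

/* Nominal case: no saturation involved. */
static void test_sat_add_nominal(void)
{
    assert(sat_add_u8(2u, 3u) == 5u);
}

/* Boundary: the result lands exactly on the maximum value. */
static void test_sat_add_boundary(void)
{
    assert(sat_add_u8(200u, 55u) == 255u);
}

/* Overflow: results above 255 are clamped rather than wrapped. */
static void test_sat_add_overflow(void)
{
    assert(sat_add_u8(200u, 100u) == 255u);
    assert(sat_add_u8(255u, 255u) == 255u);
}

/* Minimal runner: returns the number of tests executed;
 * a failing assert aborts the program. */
int run_sat_add_tests(void)
{
    test_sat_add_nominal();
    test_sat_add_boundary();
    test_sat_add_overflow();
    return 3;
}
```

Note that `assert` is compiled out when `NDEBUG` is defined, so real projects typically use a dedicated framework, such as Unity or CppUTest, which can also report results in a form that build tools collect as a metric.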

To sum up, a well-adjusted set of techniques, tests, and analyses can provide you with metrics that describe the chosen quality characteristics and sub-characteristics of the system in numbers. Consequently, you gain the ability to monitor the quality of the software under development. But we need to remember that software quality is not just good code with few errors, nor just a well-drawn graphical interface, nor a title we earn once our product achieves certain goals. It is the constant monitoring and analysis of current parameters and trends throughout the whole development process.
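
Watching trends rather than single values can be automated with very little code. The sketch below assumes a hypothetical `metric_snapshot_t` holding three numbers collected on every build (compiler warnings, static analysis findings, and line coverage). It compares the current build with the previous one and flags every metric that got worse. The coverage tolerance is an assumed parameter that keeps small, expected fluctuations from being reported as regressions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical snapshot of a few metrics collected on every build. */
typedef struct {
    unsigned compiler_warnings;
    unsigned static_analysis_findings;
    double   line_coverage_pct; /* 0.0 .. 100.0 */
} metric_snapshot_t;

/* Bit flags naming the metrics that regressed between two builds. */
enum {
    REGRESSION_NONE     = 0,
    REGRESSION_WARNINGS = 1 << 0,
    REGRESSION_FINDINGS = 1 << 1,
    REGRESSION_COVERAGE = 1 << 2
};

/* Counts must not grow; coverage may drop by at most
 * coverage_tolerance_pct percentage points. */
int detect_regressions(const metric_snapshot_t *prev,
                       const metric_snapshot_t *curr,
                       double coverage_tolerance_pct)
{
    int flags = REGRESSION_NONE;

    assert((prev != NULL) && (curr != NULL));

    if (curr->compiler_warnings > prev->compiler_warnings) {
        flags |= REGRESSION_WARNINGS;
    }
    if (curr->static_analysis_findings > prev->static_analysis_findings) {
        flags |= REGRESSION_FINDINGS;
    }
    if (curr->line_coverage_pct <
        prev->line_coverage_pct - coverage_tolerance_pct) {
        flags |= REGRESSION_COVERAGE;
    }
    return flags;
}
```

A CI job could store the previous snapshot, call `detect_regressions` on each build, and either fail the build or merely warn when the result is non-zero; which of those to choose is a decision for the team rather than for the metric.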

At the same time, introducing metrics does mean additional costs, especially if highlighting the importance of the measured areas makes the team more prone to prioritizing them over others, often against better judgment. Metrics should be introduced only after an in-depth analysis that reaches beyond the development team, and that analysis should aim to align the business needs of the product with the maturity and comfort of the development process itself.

Software quality will also never be independent of the people creating the software: no metric will ever substitute (nor should it) for the developers’ involvement and expertise. A good team is half the battle.